| Macro | Value | Description |
|---|---|---|
| MMAP_INVALID | NULL | Returned by VMM functions in case of error. |

| Return type | Function | Description |
|---|---|---|
| static vaddr_t | vma_end (const vma_t *vma) | Compute the end address of a VMA. |
| static vaddr_t | vma_start (const vma_t *vma) | |
| bool | vmm_init (vmm_t *vmm, vaddr_t start, vaddr_t end) | Initialize a VMM instance. |
| void | vmm_copy (vmm_t *dst, vmm_t *src) | Copy the content of a VMM instance inside another one. |
| struct vm_segment * | vmm_allocate (vmm_t *, vaddr_t, size_t size, int flags) | Allocate a virtual area of the given size. |
| void | vmm_free (vmm_t *, vaddr_t, size_t length) | Free a virtual address. |
| error_t | vmm_resize (vmm_t *, vma_t *, size_t) | Change the size of a VMA. |
| struct vm_segment * | vmm_find (const vmm_t *, vaddr_t) | Find the VMA to which a virtual address belongs. |
| void | vmm_clear (vmm_t *vmm) | Release all the VMAs inside a VMM instance. |
| void | vmm_destroy (vmm_t *vmm) | Free the VMM struct. |
| void * | map_file (struct file *file, int prot) | Map a file into kernel memory. |
| error_t | unmap_file (struct file *file, void *addr) | Delete a file's memory mapping. |

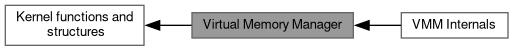

VMM

Description

The virtual memory manager is responsible for handling the available virtual addresses.

It has to keep track of which virtual addresses have already been allocated, as well as per-region flags and parameters (file-mapped, kernel, MMIO, ...). This is done per process: each process has its own VMM.

This should be distinguished from the PMM and the MMU. All three work together, but each serves its own purpose. Please refer to the corresponding headers and code for more information about them.

Implementation

The virtual memory allocator does not keep track of individual addresses, but of Virtual Memory Areas (VMAs).

We store these areas inside AVL trees, which allow for fast (logarithmic-time) lookups. We use two different trees: one ordered by the size of the areas, the other ordered by their address. Both trees contain the same areas; we choose between them depending on the operation we need to perform.
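To illustrate the two-tree idea, here is a minimal sketch. Every VMA is linked into two trees. The address-ordered tree finds the area containing an address, which is what vmm_find does. The size-ordered tree finds the smallest area that fits a request, a best-fit search. Several details are hypothetical:

- the struct layout and the helper names (`struct vma`, `insert_by_addr`, `find_best_fit`, ...);
- `vaddr_t` is assumed to be `uintptr_t`;
- AVL rebalancing is omitted for brevity, so these are plain binary search trees.

```c
#include <stddef.h>
#include <stdint.h>

typedef uintptr_t vaddr_t; /* assumption: the kernel's vaddr_t is an integer address */

/* Hypothetical VMA layout: the same node is linked into both trees. */
struct vma {
    vaddr_t start;
    size_t size;
    struct vma *addr_left, *addr_right; /* address-ordered tree */
    struct vma *size_left, *size_right; /* size-ordered tree */
};

/* Insert into the address-ordered tree (no rebalancing in this sketch). */
static void insert_by_addr(struct vma **root, struct vma *vma)
{
    while (*root != NULL)
        root = (vma->start < (*root)->start) ? &(*root)->addr_left
                                             : &(*root)->addr_right;
    vma->addr_left = vma->addr_right = NULL;
    *root = vma;
}

/* Insert into the size-ordered tree; equal sizes go to the right. */
static void insert_by_size(struct vma **root, struct vma *vma)
{
    while (*root != NULL)
        root = (vma->size < (*root)->size) ? &(*root)->size_left
                                           : &(*root)->size_right;
    vma->size_left = vma->size_right = NULL;
    *root = vma;
}

/* Address lookup: areas never overlap, so at each node the address is
 * either before it, after its (exclusive) end, or inside it. */
static struct vma *find_by_addr(struct vma *node, vaddr_t addr)
{
    while (node != NULL) {
        if (addr < node->start)
            node = node->addr_left;
        else if (addr >= node->start + node->size)
            node = node->addr_right;
        else
            return node;
    }
    return NULL;
}

/* Best fit: the smallest area whose size is at least `wanted`. */
static struct vma *find_best_fit(struct vma *node, size_t wanted)
{
    struct vma *best = NULL;

    while (node != NULL) {
        if (node->size >= wanted) {
            best = node;           /* candidate; look for a smaller one */
            node = node->size_left;
        } else {
            node = node->size_right;
        }
    }
    return best;
}
```

Both lookups cost O(height). Keeping the trees balanced is the AVL part, and it bounds that height to O(log n).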

To allocate the structs that make up these trees, we reserve a 32 KiB area within the current virtual address space, dedicated exclusively to storing them.
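One plausible way to hand out tree nodes from the reserved 32 KiB area is a fixed-size slot allocator tracked by a bitmap. This is a sketch under assumptions. The 64-byte slot size is hypothetical, and so are the names `slot_alloc`/`slot_free`. A static array also stands in for the reserved virtual range.

```c
#include <stddef.h>
#include <stdint.h>

#define RESERVED_AREA_SIZE (32 * 1024) /* the 32 KiB reserved area */
#define SLOT_SIZE 64                   /* hypothetical size of one tree node */
#define SLOT_COUNT (RESERVED_AREA_SIZE / SLOT_SIZE)

/* Stand-in for the reserved virtual range used to store tree nodes. */
static uint8_t reserved_area[RESERVED_AREA_SIZE];
/* One bit per slot: 1 = in use, 0 = free. */
static uint64_t slot_bitmap[SLOT_COUNT / 64];

/* Return the first free slot, or NULL once the area is exhausted. */
static void *slot_alloc(void)
{
    for (size_t word = 0; word < SLOT_COUNT / 64; word++) {
        if (slot_bitmap[word] == UINT64_MAX)
            continue; /* all 64 slots tracked by this word are taken */
        for (unsigned bit = 0; bit < 64; bit++) {
            if (!(slot_bitmap[word] & (UINT64_C(1) << bit))) {
                slot_bitmap[word] |= UINT64_C(1) << bit;
                return &reserved_area[(word * 64 + bit) * SLOT_SIZE];
            }
        }
    }
    return NULL;
}

/* Mark the slot containing `ptr` as free again. */
static void slot_free(void *ptr)
{
    size_t index = (size_t)((uint8_t *)ptr - reserved_area) / SLOT_SIZE;

    slot_bitmap[index / 64] &= ~(UINT64_C(1) << (index % 64));
}
```

With these numbers the area holds 512 nodes, so the bitmap itself only needs 64 bytes.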

- Note

- This is only the first design. The way we handle memory regions will surely change in the future, as I learn more and as more features are implemented in the kernel. Here is a non-exhaustive list of things that are subject to change:

- Should we keep using two trees? Modifications take twice as long, but lookups are faster.

- One region per allocation? Can't it grow too big?

- Keeping track of the page frames inside the regions will become necessary.

- Getting rid of the bitmap inside the VMM struct (how?)

◆ map_file()

void *map_file (struct file *file, int prot)

- Parameters

| Parameter | Description |
|---|---|
| file | The file to be mapped |
| prot | Protection flags for the mapping. Must be a combination of mmu_prot |

◆ unmap_file()

error_t unmap_file (struct file *file, void *addr)

- Parameters

| Parameter | Description |
|---|---|
| file | The memory mapped file |
| addr | The starting address of the mapped memory |

The starting address MUST be page aligned, otherwise the function fails with EINVAL.

- Returns

- A value of type error_t

- Note

- Every page that the mapped range overlaps will be unmapped, even if the range covers only one byte of it. Beware of overflows and mind the alignment!
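The page arithmetic behind this note can be sketched as follows. The sketch makes three assumptions:

- `pages_to_unmap` is a hypothetical helper;
- pages are 4 KiB;
- the length of the mapping is passed in as a parameter, since how unmap_file obtains it is not described here.

```c
#include <errno.h>
#include <stddef.h>
#include <stdint.h>

#define PAGE_SIZE 4096UL /* assumption: 4 KiB pages */

#define ALIGN_DOWN(x, a) ((x) & ~((a) - 1))
#define ALIGN_UP(x, a)   ALIGN_DOWN((x) + (a) - 1, (a))

/* Hypothetical helper: number of pages touched by [addr, addr + length).
 * An unaligned start is rejected with -EINVAL, mirroring unmap_file. */
static long pages_to_unmap(uintptr_t addr, size_t length)
{
    if (addr != ALIGN_DOWN(addr, PAGE_SIZE))
        return -EINVAL;
    /* A partially covered trailing page counts as a whole page.
     * Note: ALIGN_UP overflows if length is within a page of SIZE_MAX. */
    return (long)(ALIGN_UP(length, PAGE_SIZE) / PAGE_SIZE);
}
```

For instance, a 4097-byte mapping spans two pages, so both are unmapped.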

◆ vma_start()

static inline vaddr_t vma_start (const vma_t *vma)

- Returns

- The start address of a VMA.

◆ vmm_allocate()

struct vm_segment *vmm_allocate (vmm_t *vmm, vaddr_t addr, size_t size, int flags)

The minimum addressable size for the VMM is a page. The size of the allocated area is automatically rounded up to the next multiple of the page size.

You can specify a minimum virtual address for the area with the addr parameter. If it is not NULL, the returned address is guaranteed to be located at or after the specified one; otherwise, the kernel chooses one.

- Parameters

| Parameter | Description |
|---|---|
| vmm | The VMM instance to use |
| addr | Minimum starting address for the allocated area, or NULL to let the kernel choose |
| size | The size of the requested area |
| flags | Feature flags used for the allocated area. Must be a combination of vma_flags |

- Returns

- The virtual start address of the area, or NULL
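The rounding and minimum-address rules above can be sketched with a simple first-fit scan over sorted free ranges. This is a simplification under assumptions. The real allocator searches its AVL trees rather than an array. `struct free_range`, `place_area`, 4 KiB pages and the rounding up of an unaligned hint are all hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

#define PAGE_SIZE 4096UL /* assumption: 4 KiB pages */

typedef uintptr_t vaddr_t; /* assumption */

/* Hypothetical free range [start, end), kept sorted by address. */
struct free_range {
    vaddr_t start;
    vaddr_t end;
};

/* Return the first page-aligned address >= hint where `size` bytes
 * (rounded up to whole pages) fit, or 0 (standing in for NULL). */
static vaddr_t place_area(const struct free_range *ranges, size_t count,
                          vaddr_t hint, size_t size)
{
    size_t rounded = (size + PAGE_SIZE - 1) & ~(PAGE_SIZE - 1);

    hint = (hint + PAGE_SIZE - 1) & ~(PAGE_SIZE - 1); /* assumption */
    for (size_t i = 0; i < count; i++) {
        /* Never hand out anything below the requested minimum address. */
        vaddr_t start = ranges[i].start > hint ? ranges[i].start : hint;

        if (start < ranges[i].end && ranges[i].end - start >= rounded)
            return start;
    }
    return 0;
}
```

With free ranges [0x1000, 0x3000) and [0x8000, 0x10000), a 0x2001-byte request is rounded to three pages. It therefore lands at 0x8000.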

◆ vmm_clear()

void vmm_clear (vmm_t *vmm)

- Warning

- This does not release the actual virtual addresses referenced by the VMAs. Make sure to release them separately at some point.

◆ vmm_copy()

void vmm_copy (vmm_t *dst, vmm_t *src)

This function only copies the VMM's metadata. The actual content of the address space managed by the VMM should be duplicated using the CoW mechanism.

- See also

- mmu_clone

◆ vmm_destroy()

void vmm_destroy (vmm_t *vmm)

- Note

- You should release its content using vmm_clear before calling this function

◆ vmm_find()

- Returns

- The VMA containing this address, or NULL if not found

◆ vmm_free()

void vmm_free (vmm_t *vmm, vaddr_t addr, size_t length)

- Warning

- This frees neither the underlying pages nor their PTEs. All it does is mark the corresponding VMA as available for later allocations.

◆ vmm_init()

bool vmm_init (vmm_t *vmm, vaddr_t start, vaddr_t end)

- Parameters

| Parameter | Description |
|---|---|
| vmm | The VMM instance |
| start | The starting address of the VMM's range |
| end | The end address of the VMM's range (excluded) |

- Returns

- Whether the initialization succeeded

◆ vmm_resize()

error_t vmm_resize (vmm_t *vmm, vma_t *vma, size_t new_size)

When growing a VMA, this function returns E_NOMEM if the required virtual addresses are already in use.

- Parameters

| Parameter | Description |
|---|---|
| new_size | The new size of the VMA |
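The E_NOMEM condition can be sketched as a collision check against the next VMA in address order. The sketch rests on a few assumptions:

- `check_resize` and the two-field `struct vma` are hypothetical;
- growth happens in place, towards higher addresses;
- the standard `-ENOMEM` stands in for the kernel's own E_NOMEM.

```c
#include <errno.h>
#include <stddef.h>
#include <stdint.h>

typedef uintptr_t vaddr_t; /* assumption */

/* Hypothetical minimal VMA covering [start, start + size). */
struct vma {
    vaddr_t start;
    size_t size;
};

/* `next` is the following VMA in address order (NULL if none);
 * `range_end` is the exclusive end of the VMM's range. */
static int check_resize(const struct vma *vma, const struct vma *next,
                        vaddr_t range_end, size_t new_size)
{
    vaddr_t limit = (next != NULL) ? next->start : range_end;

    if (new_size <= vma->size)
        return 0; /* shrinking never collides with another area */
    if (limit - vma->start < new_size)
        return -ENOMEM; /* the required addresses are already in use */
    return 0;
}
```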

◆ kernel_vmm

Kernel virtual addresses are mapped by the PTEs above KERNEL_VIRTUAL_START and are shared across all processes. That is why they must be managed by a single, global, shared VMM.